Indian American researcher develops AI tools to monitor human rights

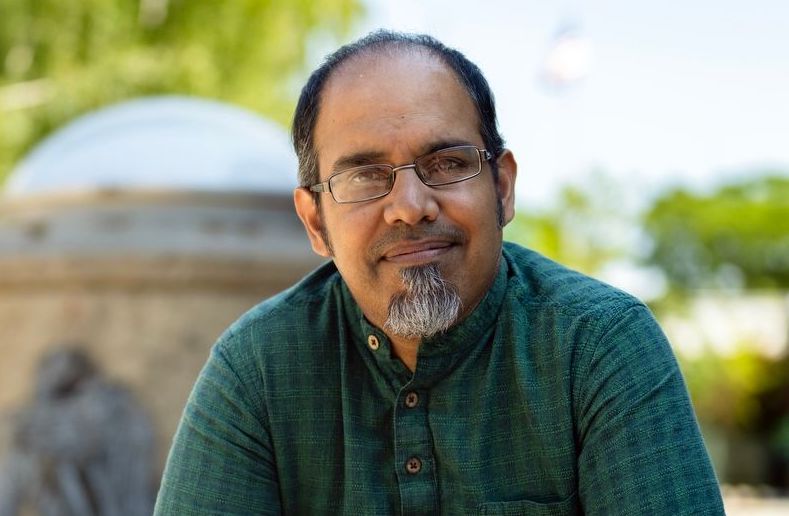

Rahul Bhargava

Rahul Bhargava, an Indian American professor of journalism and art/design at Northeastern University, and his longtime collaborator, Catherine D’Ignazio of MIT, are building artificial intelligence tools to monitor alleged civil and human rights violations.

Incidents of civil and human rights violations happen every day across the United States, but data on them is often not officially monitored, Bhargava says. Instead, grassroots organizations gather information about alleged violations from media reports, according to a story on the university site.

The incidents include gender- or identity-based killings, police killings, attacks motivated by white supremacy, mass shootings and pregnancy criminalization — when a person is arrested for reasons related to their pregnancy. “These problems matter and have significant impact on the world around us,” Bhargava says.

Bhargava and his students at Northeastern previously developed AI tools to monitor news reports on alleged intentional killings of women in North and South America for D’Ignazio’s Data Against Feminicide project.


They worked with such organizations as Sovereign Bodies Institute on tracking missing or murdered Indigenous women and girls, African American Policy Forum on tracking police violence against Black women and girls, and Feminicidio Uruguay on tracking gender-based killings in Uruguay.

With a new grant from the Data Empowerment Fund, Bhargava is coordinating work with a broader set of grassroots groups from the US, Mexico, Uruguay, Brazil and Argentina. He and his students collaborate with each group to design the best workflow and assemble a set of articles to train the AI model.

“We build a new model for every group we work with,” Bhargava says. “Many of these groups have very nuanced definitions of the types of cases they care about. So it’s an argument that says that AI is a suite of tools and techniques that can be wielded for people that have very custom needs and developed with them.”

The tools combine media databases, machine learning algorithms and email alerts to report possible cases.

First, the model ingests large sets of news stories from multiple online news archives such as Media Cloud and NewsCatcher. The stories are then filtered by keywords and passed through a custom AI classifier, an algorithm that automatically sorts data into one or more categories.
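To make the two-stage idea concrete, here is a minimal sketch of a keyword filter followed by a trained classifier. It is not the project's code: the keyword list, the handful of labeled headlines and the choice of a tiny naive Bayes classifier are all hypothetical, chosen only to show why a classifier is needed after a keyword pass. In the example, a car-crash story passes the keyword filter but is rejected by the classifier.

```python
import math
import re
from collections import Counter

def tokenize(text):
    # Lowercase and keep only runs of letters.
    return re.findall(r"[a-z]+", text.lower())

def keyword_filter(stories, keywords):
    """First pass: keep stories that mention at least one keyword."""
    kw = {k.lower() for k in keywords}
    return [s for s in stories if kw & set(tokenize(s))]

class NaiveBayes:
    """Tiny multinomial naive Bayes with Laplace smoothing (illustrative only)."""

    def fit(self, texts, labels):
        self.labels = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.labels}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set()
        for counts in self.word_counts.values():
            self.vocab.update(counts)
        self.totals = {c: sum(self.word_counts[c].values()) for c in self.labels}
        self.n_docs = len(texts)
        return self

    def predict(self, text):
        v = len(self.vocab)
        scores = {}
        for c in self.labels:
            # Log prior plus smoothed log likelihood of each known word.
            score = math.log(self.doc_counts[c] / self.n_docs)
            for word in tokenize(text):
                if word in self.vocab:
                    count = self.word_counts[c][word]
                    score += math.log((count + 1) / (self.totals[c] + v))
            scores[c] = score
        return max(scores, key=scores.get)

# Hypothetical training set, standing in for the articles a partner group labels.
train_texts = [
    "woman killed by her former partner police say",
    "man charged in killing of his wife",
    "woman found dead after domestic dispute",
    "city council approves new budget for parks",
    "police report rise in car thefts downtown",
    "local team wins championship game",
]
train_labels = ["relevant"] * 3 + ["irrelevant"] * 3
model = NaiveBayes().fit(train_texts, train_labels)

def screen(stories, keywords, model):
    """Keyword filter first, then keep only stories the classifier flags."""
    candidates = keyword_filter(stories, keywords)
    return [s for s in candidates if model.predict(s) == "relevant"]

stories = [
    "woman killed in domestic attack, partner arrested",
    "killed in car crash on highway",
    "budget vote delayed again",
]
keywords = ["killed", "killing", "dead", "murder"]
print(screen(stories, keywords, model))
```

The keyword pass is cheap and broad; the classifier, trained on each group's own examples, is what encodes the group's narrower definition of a relevant case.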

The model checks archives three times a day and delivers the results via email. A live dashboard allows real-time story monitoring as well. The model clusters similar stories, so the activists don’t need to read them all.
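The article does not say how similar stories are grouped. As one hypothetical illustration, the sketch below uses greedy clustering on word-overlap (Jaccard) similarity, with an assumed threshold of 0.5, and then builds a short digest line per cluster of the kind an email alert might contain. The headlines are invented for the example.

```python
import re

def tokens(text):
    # Set of lowercase words in a headline.
    return set(re.findall(r"[a-z]+", text.lower()))

def jaccard(a, b):
    # Share of words two headlines have in common.
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)

def cluster_stories(stories, threshold=0.5):
    """Greedy clustering: a story joins the first cluster whose first
    story is similar enough; otherwise it starts a new cluster."""
    clusters = []  # list of (representative token set, member stories)
    for story in stories:
        toks = tokens(story)
        for rep, members in clusters:
            if jaccard(toks, rep) >= threshold:
                members.append(story)
                break
        else:
            clusters.append((toks, [story]))
    return [members for _, members in clusters]

def digest(clusters):
    # One line per cluster: its first story plus a count of near-duplicates.
    return "\n".join(f"{c[0]} (+{len(c) - 1} similar)" for c in clusters)

stories = [
    "Woman killed in Montevideo, partner arrested",
    "Partner arrested after woman killed in Montevideo",
    "Protest held over rising violence against women",
]
clusters = cluster_stories(stories)
print(digest(clusters))
```

With clustering, a reader sees one line per event rather than every outlet's version of it, which is the effect Bhargava describes.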

“You’re getting a larger funnel without exposing them to all of these articles about violence,” Bhargava says. Some partners, he says, have cut their data extraction time from six hours to one hour.

Sometimes groups’ goals and the definitions of what they’re looking for overlap, Bhargava says, so they can reuse an existing model to try to find similar stories.

For instance, a group that tracks gender-based killings of women in Argentina might be able to use the same model as a group that tracks the same crimes in Uruguay. However, some groups focus on stories connected to specific identities of the victims or the perpetrators.

“That’s the kind of thing that would need a new model to be built,” he says.

The code is open source, Bhargava says, which means it is publicly accessible and available for use, modification or distribution to other interested groups and organizations.

Usually, grassroots human rights groups spend hours online reading the news and compiling stories, a tedious and emotionally taxing process. They rely on inadequate tools, Bhargava says, such as Google News Alerts, which often return too many irrelevant stories.

The groups use this data to raise awareness, provide legal help and advocate for new policies to protect vulnerable populations.

“In many countries, this kind of data isn’t [officially] collected [by the government], and these groups are filling a data hole,” Bhargava says.